Augmented Reality (AR) With Planar Homographies


Blog Post #4

An Introduction to Planar Homographies and Their Application in AR

In this project, we are going to implement an AR application step by step using planar homographies. Before we step into the implementation, let me introduce some basics of planar homographies. We will find point correspondences between two images and use them to estimate the homography that relates the two images. Using this homography, we will then warp images and finally implement the fascinating AR application.

1.1: Introduction

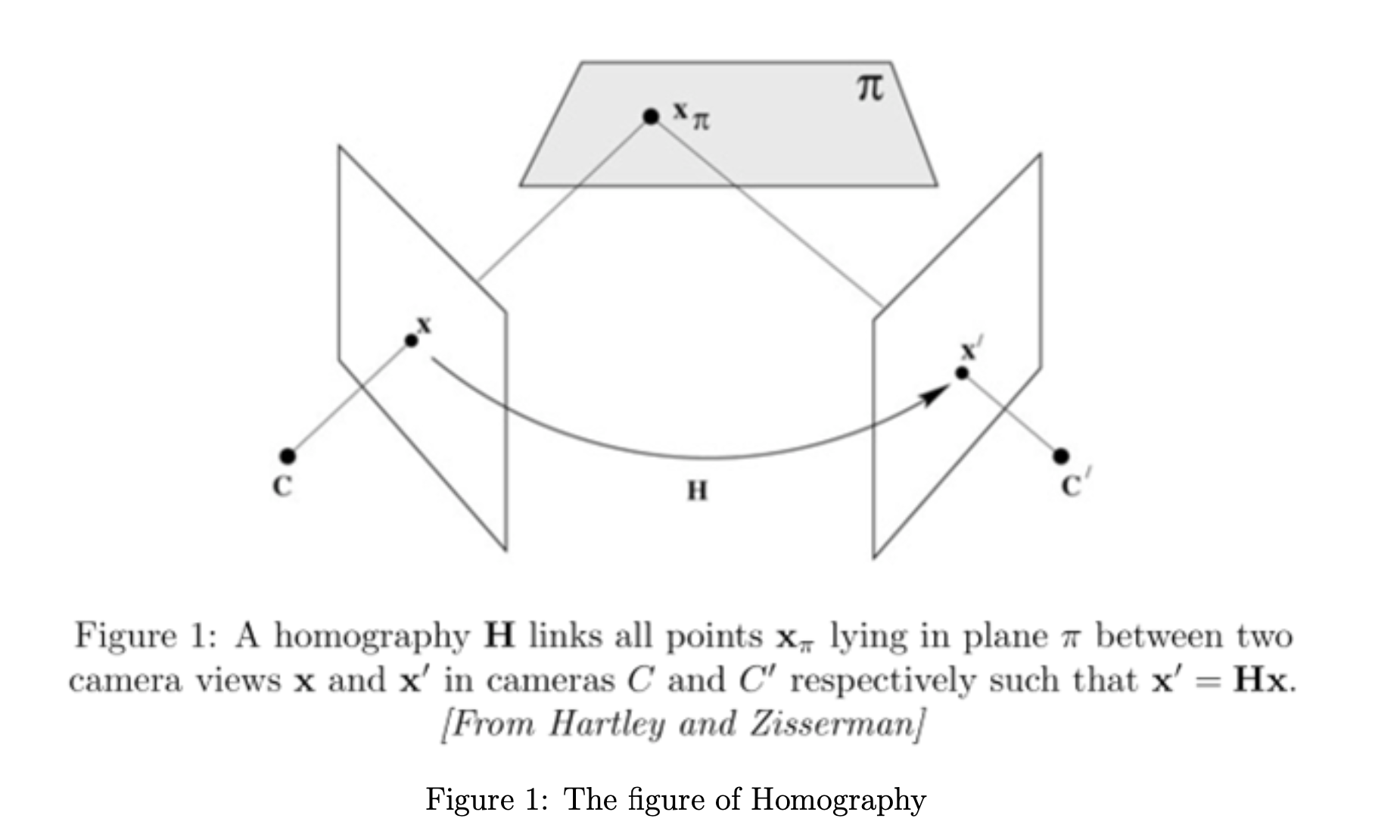

A planar homography is a warp operation (a mapping from pixel coordinates in one camera frame to pixel coordinates in another) that rests on a fundamental assumption: the points lie on a plane in the real world. Under this assumption, the pixel coordinates of points on the plane in one view can be mapped directly to the pixel coordinates of the same points in another camera view. See Figure 1 for more details.
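In practice, a planar homography is a 3x3 matrix H, defined only up to scale, that acts on homogeneous pixel coordinates: a point (x, y) is lifted to (x, y, 1), multiplied by H, and divided by the resulting third coordinate. The numpy sketch below illustrates that mapping; the function name `apply_homography` and the example matrix are hypothetical and chosen only for illustration.

```python
import numpy as np


def apply_homography(H, points):
    """Map an (N, 2) array of pixel coordinates through a 3x3 homography H."""
    points = np.asarray(points, dtype=float)
    # Lift (x, y) to homogeneous coordinates (x, y, 1).
    homog = np.hstack([points, np.ones((points.shape[0], 1))])
    # Apply H to every point; each row of `mapped` is (x', y', w').
    mapped = homog @ H.T
    # Divide by w' to return to ordinary pixel coordinates.
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical H: scale by 2, translate by (10, 5), plus a mild
# perspective term in the bottom row.
H = np.array([[2.0, 0.0, 10.0],
              [0.0, 2.0, 5.0],
              [0.0, 0.001, 1.0]])
corners = np.array([[0, 0], [100, 0], [100, 100], [0, 100]])
print(apply_homography(H, corners))
```

Scaling H by any nonzero constant leaves the mapped points unchanged, which is why a homography has 8 degrees of freedom rather than 9.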

1.2: Feature Detection, Description, and Matching

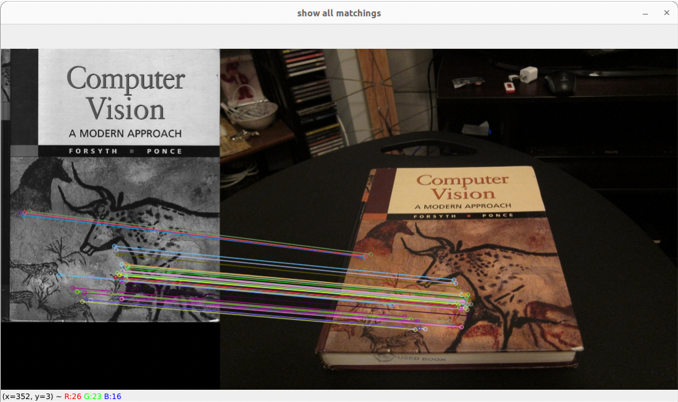

Before finding the homography between an image pair, we need to find corresponding point pairs between the two images. I used cv2.FastFeatureDetector_create() to detect FAST features, then cv2.xfeatures2d.BriefDescriptorExtractor_create() to compute BRIEF descriptors, and finally cv2.BFMatcher() to find correspondences. Figure 2 shows the matching results between the two images.
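BRIEF descriptors are binary strings, so a brute-force matcher compares them with the Hamming distance (the number of differing bits); a typical OpenCV configuration for binary descriptors is `cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)`. The numpy sketch below illustrates what that matching step does on bit-unpacked descriptors, including the optional cross-check. It is not OpenCV's implementation, and the function name `match_hamming` and the random test descriptors are hypothetical.

```python
import numpy as np


def match_hamming(desc1, desc2, cross_check=True):
    """Brute-force match binary descriptors by Hamming distance.

    desc1: (N1, B) array of 0/1 bits; desc2: (N2, B) array of 0/1 bits.
    Returns a list of (i, j, distance) tuples.
    """
    d1 = np.asarray(desc1, dtype=np.int32)
    d2 = np.asarray(desc2, dtype=np.int32)
    # Hamming distance for every pair (i, j): count of differing bits.
    dist = (d1[:, None, :] != d2[None, :, :]).sum(axis=2)
    best_j = dist.argmin(axis=1)  # nearest neighbour in desc2 for each row of desc1
    best_i = dist.argmin(axis=0)  # nearest neighbour in desc1 for each row of desc2
    matches = []
    for i, j in enumerate(best_j):
        # Cross-check: keep (i, j) only if i is also j's nearest neighbour.
        if cross_check and best_i[j] != i:
            continue
        matches.append((i, int(j), int(dist[i, j])))
    return matches


rng = np.random.default_rng(0)
desc1 = rng.integers(0, 2, size=(5, 256))
# desc2 holds the same descriptors in shuffled order, with 10 bits flipped as "noise".
perm = np.array([3, 0, 4, 1, 2])
desc2 = desc1[perm].copy()
desc2[:, :10] ^= 1
print(match_hamming(desc1, desc2))
```

Each true pair differs in only the 10 flipped bits, while unrelated random 256-bit descriptors differ in roughly half their bits, so every descriptor is paired with its shuffled copy.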

Figure 2

1.3: Rotation Angle Analysis

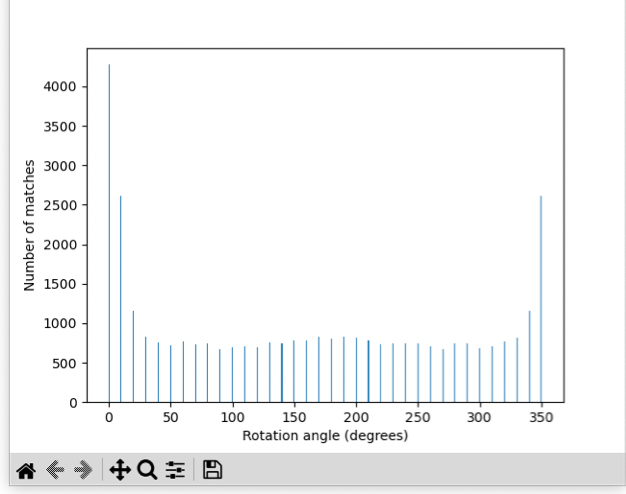

From the histogram, we can see a general trend: as the rotation angle increases, the number of matches decreases. This is mainly because BRIEF is not rotation invariant. Each descriptor bit compares the intensities of a fixed pair of pixel locations in the patch around a keypoint, so once the image rotates, the same physical point produces a different descriptor, and the matcher finds fewer correspondences between the two images.

Figure 3: Number of matches versus rotation angle. For example, there are 4275 matches at 0 degrees, 2610 matches at 10 degrees, and 1154 matches at 20 degrees.
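The sensitivity of BRIEF to rotation can be illustrated with a toy BRIEF-style descriptor: each bit records whether the intensity at one fixed sample location is lower than at another. The numpy sketch below computes such a descriptor for a random patch and for the same patch rotated by 90 degrees. The function `brief_like`, the 32x32 patch size, and the uniformly random sampling pattern are hypothetical simplifications (real BRIEF smooths the patch and uses its own sampling pattern), but the key property is the same: the sampling pattern does not rotate with the patch.

```python
import numpy as np

rng = np.random.default_rng(42)
PATCH = 32
N_BITS = 256
# Fixed sampling pattern: N_BITS pairs of (row, col) locations inside the patch.
pairs = rng.integers(0, PATCH, size=(N_BITS, 4))


def brief_like(patch):
    """Toy BRIEF-style descriptor: bit k is 1 if patch[p_k] < patch[q_k]."""
    p = patch[pairs[:, 0], pairs[:, 1]]
    q = patch[pairs[:, 2], pairs[:, 3]]
    return (p < q).astype(np.uint8)


patch = rng.random((PATCH, PATCH))
d_original = brief_like(patch)
d_same = brief_like(patch.copy())
d_rotated = brief_like(np.rot90(patch))  # same content, rotated by 90 degrees

print("Hamming distance, identical patch:", int((d_original != d_same).sum()))
print("Hamming distance, rotated patch:  ", int((d_original != d_rotated).sum()))
```

The identical patch reproduces the descriptor exactly, while the rotated patch flips a large fraction of the bits, so a Hamming-distance matcher is much less likely to pair the two.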

2.1: Image Warping

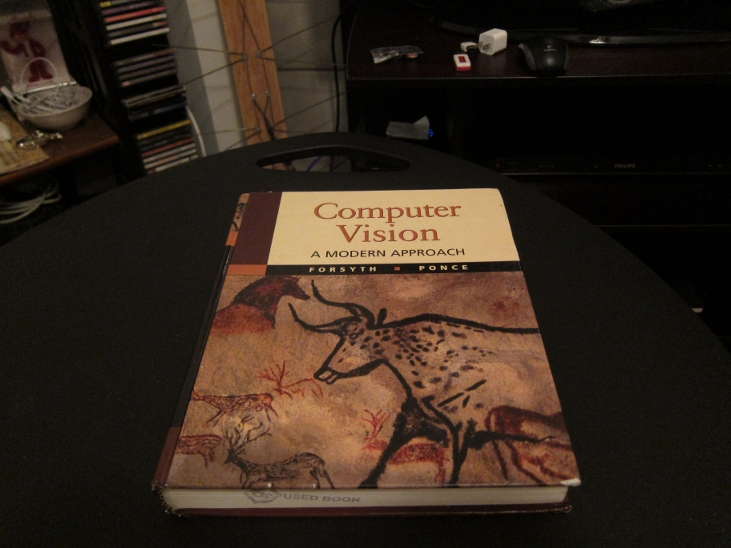

Input images: cv_cover, cv_desk, and hp_cover

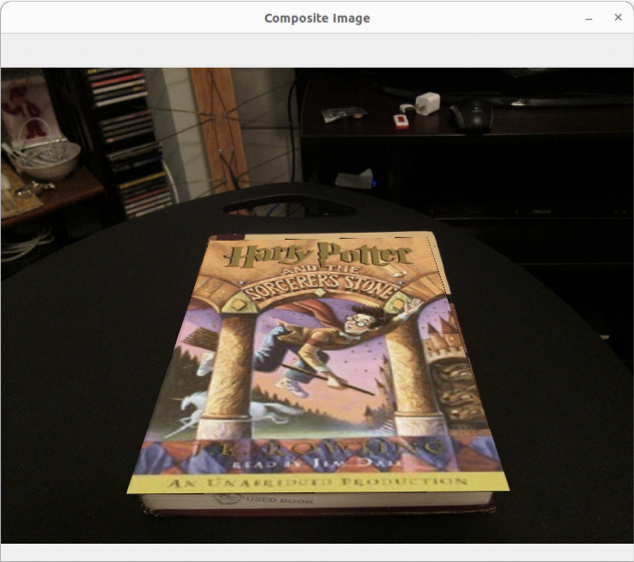

The matching results are shown in Figure 1. Using the estimated homography, we can now warp hp_cover so that it overlays cv_desk.
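One standard way to estimate a homography from matched point pairs is the direct linear transform (DLT): each correspondence (x, y) to (u, v) gives two linear equations in the nine entries of H, and the least-squares solution is the right singular vector of the stacked system with the smallest singular value. The numpy sketch below assumes the correspondences are already free of mismatches and normalizes the coordinates first (Hartley normalization) for numerical stability; the function names are hypothetical. With real matches, outliers are usually rejected with a robust estimator such as RANSAC, for example via `cv2.findHomography(src, dst, cv2.RANSAC, 5.0)`.

```python
import numpy as np


def normalize_points(pts):
    """Similarity transform T that moves the centroid to the origin and
    scales so the mean distance from it is sqrt(2) (Hartley normalization)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    return (homog @ T.T)[:, :2], T


def estimate_homography(src, dst):
    """Estimate H with dst ~ H @ src from N >= 4 point pairs via normalized DLT."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_n, T_src = normalize_points(src)
    dst_n, T_dst = normalize_points(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # Two equations per pair, from u * (h3 . p) = h1 . p and v * (h3 . p) = h2 . p.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # h is the right singular vector associated with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    H_n = Vt[-1].reshape(3, 3)
    # Undo the normalization, then fix the arbitrary scale so H[2, 2] = 1.
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]


# Synthetic check: project points with a known (hypothetical) H, then recover it.
H_true = np.array([[1.2, 0.1, 30.0],
                   [-0.05, 0.9, 12.0],
                   [0.0005, 0.0002, 1.0]])
src = np.array([[0, 0], [640, 0], [640, 480], [0, 480], [320, 240], [100, 400]], dtype=float)
proj = np.hstack([src, np.ones((len(src), 1))]) @ H_true.T
dst = proj[:, :2] / proj[:, 2:3]
H_est = estimate_homography(src, dst)
print(np.round(H_est, 4))
```

Normalizing both point sets keeps the entries of the DLT matrix on comparable scales; without it, pixel coordinates in the hundreds make the smallest singular vector sensitive to noise.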

The composite image
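The warp-and-composite step can be sketched with inverse warping: for every pixel of the background (cv_desk), map it back through the inverse homography into the template frame and, if it lands inside the template, copy that template pixel. The numpy sketch below uses nearest-neighbour sampling for brevity. It assumes H maps template coordinates to background coordinates and that hp_cover has already been resized to cv_cover's size (for example with `cv2.resize(hp_cover, (width, height))`), because the homography is estimated from cv_cover's coordinates. The function name `warp_and_composite` is hypothetical; OpenCV's `cv2.warpPerspective(src, M, dsize)` performs the warping itself with proper interpolation.

```python
import numpy as np


def warp_and_composite(template, background, H):
    """Overlay `template` onto `background` using H (template -> background).

    Images are (rows, cols) or (rows, cols, channels) arrays; nearest-neighbour sampling.
    """
    h_bg, w_bg = background.shape[:2]
    h_t, w_t = template.shape[:2]
    # Background pixel grid in homogeneous coordinates (x = column, y = row).
    ys, xs = np.mgrid[0:h_bg, 0:w_bg]
    grid = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    # Inverse warp: where does each background pixel come from in the template?
    src = np.linalg.inv(H) @ grid
    w = src[2]
    valid = np.abs(w) > 1e-12  # skip pixels that map to (near) infinity
    safe_w = np.where(valid, w, 1.0)
    src_x = np.rint(src[0] / safe_w).astype(int)
    src_y = np.rint(src[1] / safe_w).astype(int)
    # Mask of background pixels whose preimage lies inside the template.
    inside = valid & (src_x >= 0) & (src_x < w_t) & (src_y >= 0) & (src_y < h_t)
    composite = background.reshape((-1,) + background.shape[2:]).copy()
    composite[inside] = template[src_y[inside], src_x[inside]]
    return composite.reshape(background.shape)


# Toy usage: a white 4x4 "cover" pasted into a black 10x10 "desk" by a pure translation.
template = np.full((4, 4, 3), 255, dtype=np.uint8)
background = np.zeros((10, 10, 3), dtype=np.uint8)
H = np.array([[1.0, 0.0, 3.0],
              [0.0, 1.0, 2.0],
              [0.0, 0.0, 1.0]])
result = warp_and_composite(template, background, H)
```

Iterating over destination pixels rather than source pixels guarantees every covered background pixel receives a value, so the overlay has no holes even when the cover is enlarged by the warp.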

2.2: AR Video

The key idea is to track the cover in each frame of the target video and overlay the corresponding frame of the source video onto it, resulting in an AR video similar to the LifePrint project. Note that GitHub does not support embedded videos, so I use GIFs instead.
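A practical detail when overlaying video frames is that a source frame usually has a different aspect ratio from the cover, so resizing it directly to the cover's size stretches the content. A common remedy, assumed here rather than taken from the post, is to center-crop each source frame to the cover's aspect ratio before resizing and warping it as in Section 2.1. The helper `crop_to_aspect` below is a hypothetical numpy sketch of that cropping step, and the frame and cover sizes in the example are made up.

```python
import numpy as np


def crop_to_aspect(frame, target_w, target_h):
    """Center-crop `frame` (rows, cols, ...) to the aspect ratio target_w / target_h."""
    h, w = frame.shape[:2]
    target_ratio = target_w / target_h
    if w / h > target_ratio:
        # Frame is too wide: keep the full height, trim columns equally on both sides.
        new_w = int(round(h * target_ratio))
        x0 = (w - new_w) // 2
        return frame[:, x0:x0 + new_w]
    # Frame is too tall (or already matches): keep the full width, trim rows.
    new_h = int(round(w / target_ratio))
    y0 = (h - new_h) // 2
    return frame[y0:y0 + new_h, :]


# Hypothetical sizes: a 640x360 video frame and a portrait cover 350 wide by 440 tall.
frame = np.zeros((360, 640, 3), dtype=np.uint8)
cropped = crop_to_aspect(frame, 350, 440)
print(cropped.shape)
```

Per frame, the cropped source frame would then be resized to the cover's size and composited onto the target frame with that frame's homography.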

source video

target video

AR video

Hope you enjoy this :)

Reference:

Project 2 coursework from HKUST ELEC6910A - First Principles of Computer Vision, instructor: Prof. Ping TAN, 2023 Fall